Imagine for a second that you are an investigative journalist working to expose corruption in the highest ranks of government. Shortly before you publish your damning exposé, someone alerts you to a disturbing video of you circulating online. The video appears to show you enjoying yourself in a hot threesome. You rush to check for yourself, because what the hell?! You cannot believe your eyes. There it is: the spitting image of you, sandwiched between two strangers in a horrific deepfake porno. The video was posted anonymously and cannot be traced.

Or imagine it’s the day before an election. A deepfake voice note surfaces online, apparently from the front-running presidential candidate. In the clip, he is heard admitting to the cover-up of the century involving Covid-19 vaccines, or making shockingly racist and homophobic remarks. He denies it, but it sounds exactly like him! Chaos erupts.

Deepfakes have the very real and imminent potential to amplify disinformation, erode our trust in media and journalism, and undermine democracy, possibly leaving us unable to distinguish between what’s real and what’s fake.

What are deepfakes?

Deepfake videos only began trending in 2017, when a Reddit user with the nickname “deepfakes” posted videos in which the faces of Hollywood celebrities had been swapped onto the bodies of similar-looking porn performers.

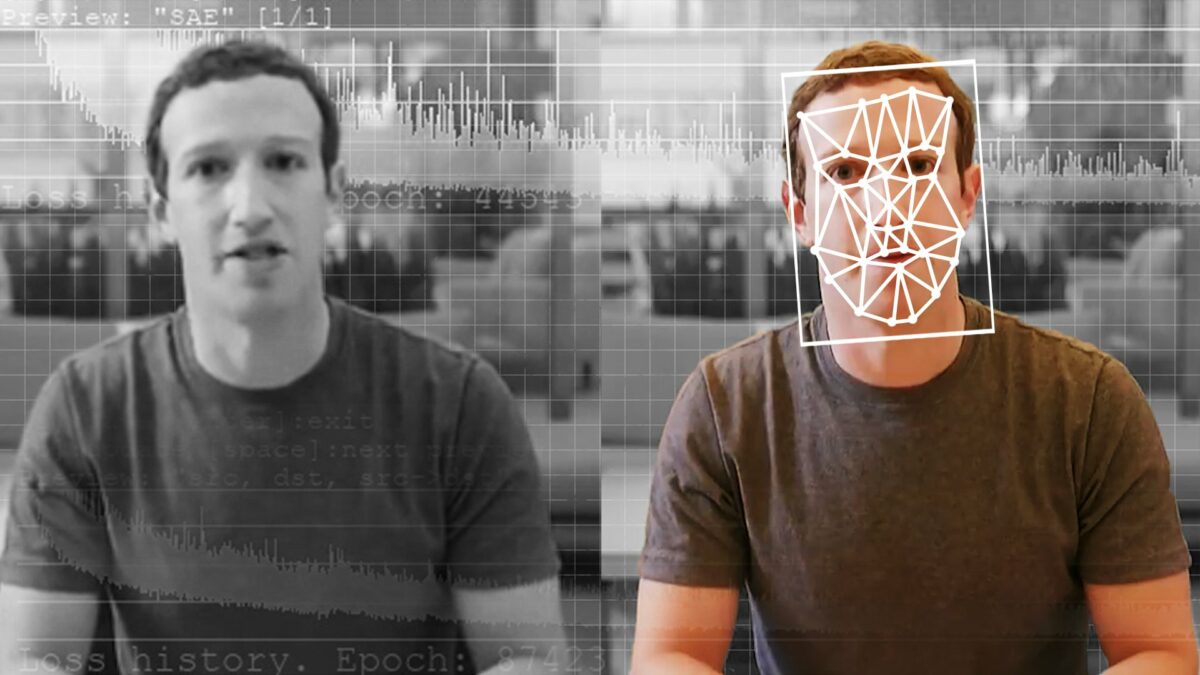

At the most basic level, deepfakes are fake, or synthetic, videos and audio generated using artificial intelligence (AI), specifically machine learning techniques. Deepfakes often show a person appearing to say or do something they have never said or done. The algorithm takes the face, facial expressions, lip and teeth movements, voice and overall likeness of one person and transfers them onto footage of another person.

Applications such as DeepFaceLab, FaceSwap and FaceIt are designed to make creating deepfakes quick and accessible. These programs train an AI model to recognise which part of each image is the face, how to swap one face for another, and how to follow the face as it moves around the frame in a video. The model keeps improving as it trains on more footage, and the result is often a dangerously convincing fake video that looks and sounds real.

AI can also imitate a specific person’s voice. Voice cloning technology learns from recordings of the target and then produces synthetic speech that sounds very much like them.

As early as 2019, Samsung had developed software that could create a highly convincing deepfake from a single image. Of course, the more images fed to the algorithm, the more convincing the resulting deepfake will be.

What makes deepfakes different?

Manipulation of media is nothing new; we were editing images long before Photoshop. But if we consider deepfakes in the wider context of rising digital disinformation (disinformation about Covid-19 vaccines comes to mind), it becomes clear that they raise several systemic socio-political and legal concerns. They can manipulate public discourse, undermine elections and threaten national security.

From a legal perspective, deepfakes pose a significant risk of human rights violations, particularly of the rights to dignity, privacy and freedom of information. Think, for instance, of the consequences if deepfake surveillance footage were submitted as evidence in court, or if falsely incriminating footage were published anonymously online. Notably, deepfake porn appears to target women almost exclusively, which shows that the risks of deepfakes have a significant gender dimension.

When it comes to images on the internet and social media, we have learned to be cautious and not to believe everything we see. We know that a picture may have been edited, photoshopped or retouched, so we keep at the back of our minds the possibility that it has been manipulated in some way.

But deepfakes are becoming increasingly difficult to detect, and even when they are detected, the damage may already be done. For example, many of the people who first saw or heard the deepfake and believed it may never see, or accept, the evidence that it is fake. And even where the creator is traced and located, it is often unclear what charges can be brought against them, since, technically speaking, creating a deepfake is not in itself illegal unless it amounts to defamation or deepfake porn.

How do we mitigate the impact of deepfakes through policy and regulation? At the moment, deepfakes remain dangerously unregulated. In my view, the impact of deepfakes can be mitigated, in part, through policy and regulation targeting the different stages of a deepfake’s life cycle.

Regulating the AI technology

One way to mitigate the impact of deepfakes on society is to regulate the underlying AI technology used to generate them and to create legal obligations for the entities that produce this technology. At the time of writing, South Africa did not have a national AI policy or regulation.

Regulating the creation of deepfakes

Like any piece of technology, deepfake technology will serve whatever aims and ideals we put it to. As such, some deepfake applications carry low risk and can, in certain circumstances, be a protected form of expression. This will likely be the case where deepfakes are used consensually or for creative purposes, such as parody.

But to the extent that a deepfake incites imminent violence, or advocates hatred based on race, ethnicity, gender or religion that constitutes incitement to cause harm, it will not be protected under section 16 of the Constitution. In such cases, the legislature could develop policy and legislation specifically targeting deepfake content creators. This could include norms and rules on the use of deepfake technology, such as labelling obligations, prohibitions on certain deepfake applications, and criminal sanctions for unlawful deepfake applications.

Regulating the dissemination of deepfakes

Third, we know that media is circulated by users on social media and other digital platforms. If we want to limit the dissemination of certain deepfakes, online platforms have a crucial role to play. And since the extent of dissemination also determines the scale and severity of a deepfake’s impact, platforms should bear legal obligations too, including having deepfake detection software in place.

The targeted individual

Where an individual is the target of a deepfake, their constitutional rights are, in theory, protected by law, but enforcing them in practice may prove difficult, especially in the absence of specific regulation on how deepfakes will be treated under the law. Notably, however, the Protection of Personal Information Act (POPIA) makes it an offence to process a data subject’s personal information in this way without their consent. The offence is punishable by up to 12 months’ imprisonment, a fine, or both. If the deepfake amounts to defamation, the individual can institute a defamation action under the common law, but only if the perpetrator can be traced!

In the case of deepfake porn, however, section 16 of the Cybercrimes Act 19 of 2020 makes it a crime to disclose real or simulated intimate images of a person without their consent. While this section is, to some extent, broad enough to respond to deepfake porn, the Act itself does not seem to respond to the threats posed by deepfakes more broadly.

Several interconnected social conditions have created a breeding ground for deepfakes: the rise of the internet, the rise of AI, the availability of massive quantities of audio-visual data online, the growing primacy of visual communication and the increasing spread of disinformation. These conditions, coupled with social media’s capacity to fuel the spread of disinformation, often anonymously, mean that a malicious deepfake is likely to achieve its creator’s aims.

The government’s approach to technological developments has always been reactive. However, there are a few things each of us can do in our personal capacity to mitigate the impact of deepfakes.

If a piece of media content stirs up intense emotions in you, there is a good chance you are being manipulated. Pause. Breathe. In those circumstances, refrain from sharing the content until you are satisfied that it is not fake. Be circumspect: inspect the content for any observable inconsistencies or other clues that might indicate manipulation. If you come across a deepfake, report it to the platform. Lastly, watch an example of a deepfake video on YouTube to see for yourself how convincing they can be.